Motion Style Transfer

Generating animation via stylistic motion transfer

Period: March 2, 2022 → June 16, 2022

Last Edited: February 25, 2025, 3:50 PM

Tools & Language: Unreal

State: Done

Description: Generating animation using stylistic motion transfer

Participants: 5

2022–1 Game Engineering

Responsibilities & Tasks

- Studied the target paper

- Collected motion data through motion capture and processed it

- Handled project visualization

Reason for Choosing This Technology

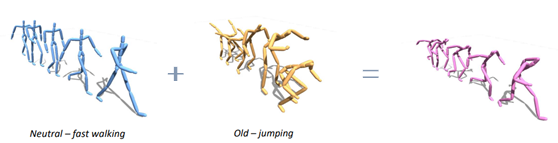

Animation production is a resource-intensive aspect of game development.

To generate a variety of motions for multiple characters, you would typically need to create separate animations for each character state.

We sought a technique to combine an existing base animation with a new style, thus reducing the workload.

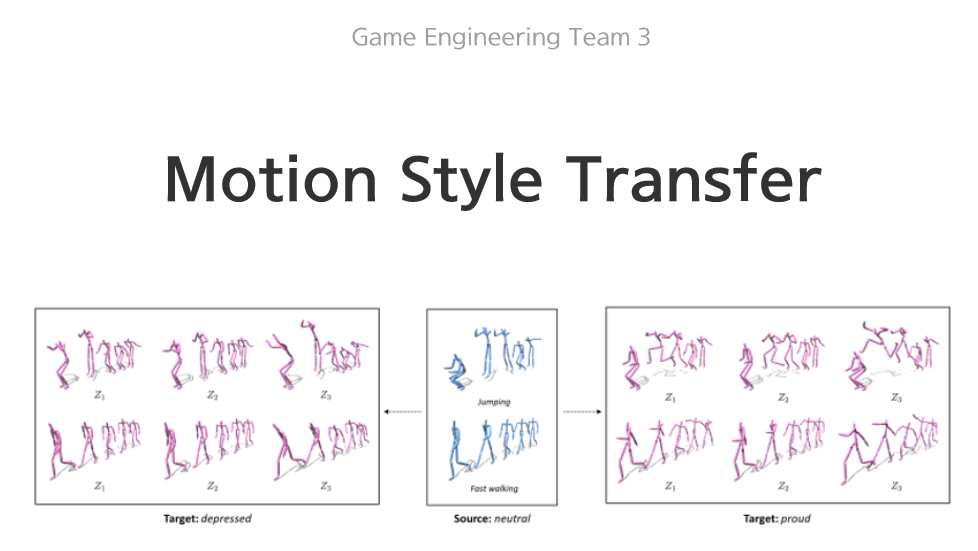

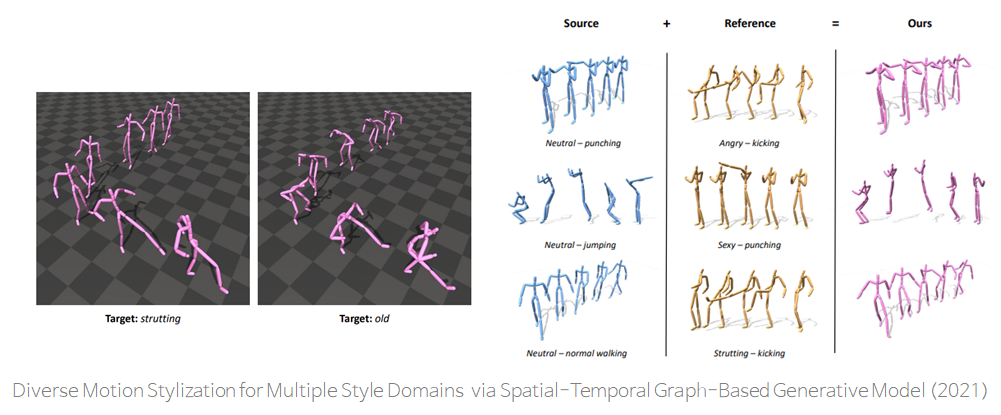

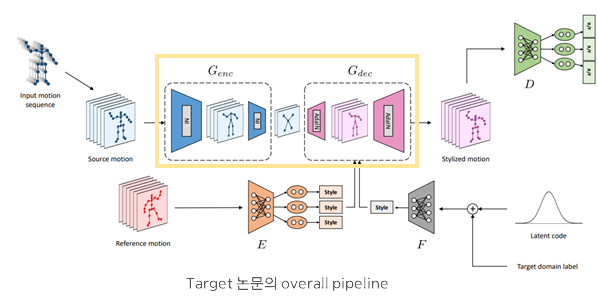

Target Paper

- Quickly generates new concept animations via Stylistic Motion Transfer

- Employs a Spatial-Temporal Graph-Based Generative Model for fast and precise training

- Related paper research by minari:

Paper Improvement Suggestions

Issue 1

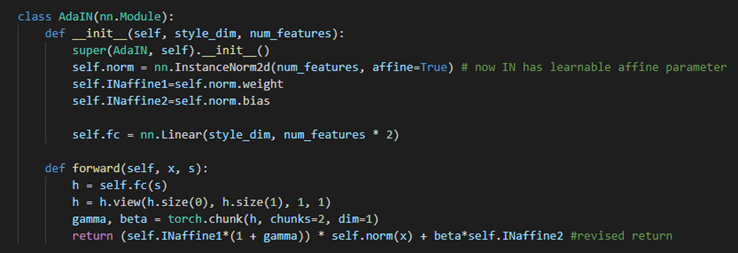

- The paper uses an AdaIN (Adaptive Instance Normalization) layer. AdaIN matches only the per-channel mean and variance of the features, so it can overlook important local style features that appear on different feature maps.

- Improvement: Add learnable affine parameters (a scale and a shift) to the Instance Normalization calculation within the AdaIN layer, so that they carry through to the final output.
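For reference, the first line below is the standard AdaIN operation, where x is the source (content) feature, y is the reference (style) feature, and μ and σ are the per-channel mean and standard deviation. The second line is only one possible reading of the proposed change: γ and β are assumed names for the learnable scale and shift, and the exact formulation used in the project may differ.

```latex
\mathrm{AdaIN}(x, y) = \sigma(y)\,\frac{x - \mu(x)}{\sigma(x)} + \mu(y)

\mathrm{AdaIN}_{\gamma,\beta}(x, y) = \sigma(y)\left(\gamma\,\frac{x - \mu(x)}{\sigma(x)} + \beta\right) + \mu(y)
```

With γ = 1 and β = 0 the second form reduces to the first, so the change can only add expressiveness to the normalization step.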

Issue 2

- During output post-processing, the root trajectory of the source motion data is used.

- If you attempt to apply a very intense style, a mismatch arises between the style and the source root’s momentum, resulting in foot skating.

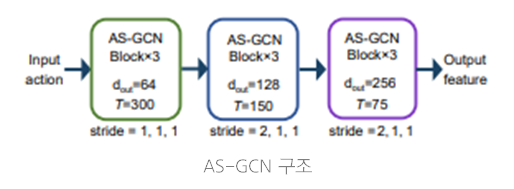

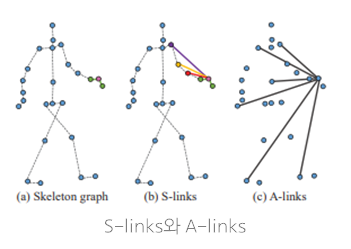

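- Improvement: Use AS-GCN (Actional-Structural GCN), which combines S-links (structural links between joints that are close on the skeleton) with A-links (actional links that capture relationships between distant joints), to address the foot skating problem.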

AS-GCN structure

S-links, A-links
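The idea of combining the two link types can be sketched roughly as follows. This is a simplified illustration and not the project's model: the toy skeleton, the joint names, the hand-set A-link matrix, and the `a_scale` weight are all assumptions. In AS-GCN the A-links are inferred from the motion data by a separate module, whereas here one is hard-coded to keep the example short.

```python
import numpy as np

def normalize(adj):
    """Row-normalize an adjacency matrix so each joint averages over its neighbors."""
    deg = adj.sum(axis=1, keepdims=True)
    return adj / np.maximum(deg, 1e-8)

def s_links(adj, max_hops):
    """Structural links: powers of the normalized skeleton adjacency (1..max_hops hops)."""
    a_hat = normalize(adj)
    return [np.linalg.matrix_power(a_hat, hop) for hop in range(1, max_hops + 1)]

def graph_conv(x, s_link_mats, a_link, s_weights, a_weight, a_scale=0.5):
    """One layer: sum of the S-link branches plus a scaled A-link branch."""
    out = sum(s @ x @ w for s, w in zip(s_link_mats, s_weights))
    return out + a_scale * (a_link @ x @ a_weight)

# Toy skeleton (assumed): hip(0)-knee(1)-ankle(2)-toe(3), plus hip(0)-spine(4).
edges = [(0, 1), (1, 2), (2, 3), (0, 4)]
num_joints = 5
adj = np.zeros((num_joints, num_joints))
for i, j in edges:
    adj[i, j] = adj[j, i] = 1.0

# Hypothetical A-link between toe(3) and spine(4), which are far apart on the skeleton.
a_link = np.zeros_like(adj)
a_link[3, 4] = a_link[4, 3] = 1.0

rng = np.random.default_rng(0)
x = rng.normal(size=(num_joints, 3))                    # per-joint input features
s_mats = s_links(adj, max_hops=2)
s_weights = [rng.normal(size=(3, 4)) for _ in s_mats]   # one weight matrix per hop
a_weight = rng.normal(size=(3, 4))
out = graph_conv(x, s_mats, a_link, s_weights, a_weight)
print(out.shape)
```

The 1-hop S-link matrix connects only skeleton neighbors, while the 2-hop matrix already links the hip to the ankle. The A-link branch adds a direct path between joints that the skeleton alone would only connect through many hops.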

Paper Improvement (1)

Problem

- The style transfer process uses position and rotation of the reference motion’s joints.

- However, for the root joint, the source motion’s position and rotation are used instead.

- If the reference motion is highly dynamic while the source motion is relatively static, it can lead to foot skating and unnatural motion.

Solution

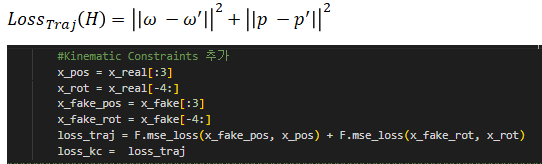

- Use angular velocity (ω) and position (p) for the input motion.

- Let the generated fake motion have angular velocity (ω’) and position (p’).

- Formulate a trajectory loss as the mean squared error (MSE) between the input values (ω, p) and the generated values (ω’, p’).

- By constraining the motion trajectory, we avoid sudden changes that could produce abnormal movements.
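The loss formula itself is not preserved on this page, so the following is a minimal sketch under stated assumptions: the loss is taken to be the sum of one MSE term on angular velocity and one on position, written as L_traj = MSE(ω, ω’) + MSE(p, p’). The weights `w_omega` and `w_p`, the array shapes, and the function names are hypothetical.

```python
import numpy as np

def mse(a, b):
    """Mean squared error over all elements."""
    return float(np.mean((a - b) ** 2))

def trajectory_loss(omega, p, omega_gen, p_gen, w_omega=1.0, w_p=1.0):
    """Weighted sum of the MSE on angular velocity and the MSE on position."""
    return w_omega * mse(omega, omega_gen) + w_p * mse(p, p_gen)

# Toy data (assumed layout): 4 frames, scalar angular velocity, 3D position.
omega = np.array([0.0, 0.1, 0.2, 0.1])
p = np.array([[0.0, 0.0, 0.0],
              [0.1, 0.0, 0.0],
              [0.2, 0.0, 0.0],
              [0.3, 0.0, 0.0]])

# A generated motion that turns slightly faster and drifts along x.
omega_gen = omega + 0.1
p_gen = p.copy()
p_gen[:, 0] += 0.2

loss = trajectory_loss(omega, p, omega_gen, p_gen)
print(loss)
```

The loss is zero only when the generated trajectory matches the input exactly, and it grows quadratically with the deviation, which is what penalizes sudden jumps.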

Paper Improvement (2)

Problem

- In the target paper, the source motion goes through an encoder with two IN (Instance Normalization) layers, resulting in a style-normalized state before it enters the decoder.

- In the decoder, there is an AdaIN layer, which shifts the source motion’s features to the reference style features.

- The target paper shows that this design can transfer style between motions with different content.

- However, when the source and reference motions differ significantly (especially for large limb movements), the paper’s method can struggle to fully separate the original style from the source motion.

- This can leave residual artifacts of the source style in the final motion, particularly in the hands or feet.
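The normalize-then-restyle flow described above can be sketched as follows. This is a simplified stand-in, not the paper's network: features are assumed to be laid out as (channels, frames), the encoder and decoder are reduced to the normalization steps alone, and all function names are illustrative.

```python
import numpy as np

EPS = 1e-5

def channel_stats(feat):
    """Per-channel mean and standard deviation over the time axis."""
    mean = feat.mean(axis=1, keepdims=True)
    std = np.sqrt(feat.var(axis=1, keepdims=True) + EPS)
    return mean, std

def instance_norm(feat):
    """Remove each channel's own statistics (the style-normalized state)."""
    mean, std = channel_stats(feat)
    return (feat - mean) / std

def adain(content_feat, style_feat):
    """Shift normalized content features to the style features' statistics."""
    style_mean, style_std = channel_stats(style_feat)
    return style_std * instance_norm(content_feat) + style_mean

rng = np.random.default_rng(0)
source = rng.normal(loc=2.0, scale=3.0, size=(8, 60))      # source motion features
reference = rng.normal(loc=-1.0, scale=0.5, size=(8, 40))  # reference style features

normalized = instance_norm(source)        # encoder side: style-normalized
stylized = adain(normalized, reference)   # decoder side: reference style applied

print(np.round(stylized.mean(axis=1), 3))
print(np.round(reference.mean(axis=1), 3))
```

Only the per-channel mean and standard deviation are exchanged. Any style information in the source that is not captured by those two statistics passes through unchanged, which is consistent with the residual artifacts noted above.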

Solution

- AdaIN includes Instance Normalization (IN), which by default does not have learnable parameters.

- By adding learnable affine parameters during IN calculation in the AdaIN layer, you can more effectively reduce end-effector artifacts (e.g., hands, feet) in the final output.
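A minimal sketch of such a layer, assuming a (channels, frames) feature layout, is shown below. The class name `AffineAdaIN` and the parameter names `gamma` and `beta` are hypothetical, and plain NumPy arrays stand in for parameters that a training framework would update by gradient descent.

```python
import numpy as np

class AffineAdaIN:
    """AdaIN whose internal instance norm has a per-channel scale and shift."""

    def __init__(self, num_channels, eps=1e-5):
        # Identity initialization: the layer starts out as standard AdaIN.
        self.gamma = np.ones((num_channels, 1))
        self.beta = np.zeros((num_channels, 1))
        self.eps = eps

    def __call__(self, content_feat, style_feat):
        # Instance normalization of the content, followed by the affine step.
        mean = content_feat.mean(axis=1, keepdims=True)
        std = np.sqrt(content_feat.var(axis=1, keepdims=True) + self.eps)
        normalized = self.gamma * (content_feat - mean) / std + self.beta
        # Standard AdaIN restyling with the reference statistics.
        style_mean = style_feat.mean(axis=1, keepdims=True)
        style_std = np.sqrt(style_feat.var(axis=1, keepdims=True) + self.eps)
        return style_std * normalized + style_mean

rng = np.random.default_rng(0)
content = rng.normal(size=(4, 50))
style = rng.normal(loc=1.0, scale=2.0, size=(4, 30))

layer = AffineAdaIN(num_channels=4)
out_identity = layer(content, style)   # behaves like plain AdaIN

# Pretend training adjusted channel 0 only.
layer.gamma[0] = 0.5
layer.beta[0] = 0.2
out_tuned = layer(content, style)
```

In PyTorch, `torch.nn.InstanceNorm1d` exposes a comparable switch through its `affine=True` argument. Whether the project used that module is not stated here.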

Result